Learning to Dress 3D People in Generative Clothing

Qianli Ma, Jinlong Yang, Anurag Ranjan, Sergi Pujades, Gerard Pons-Moll, Siyu Tang, and Michael J. Black

Computer Vision and Pattern Recognition (CVPR) 2020, Seattle, WA

Abstract

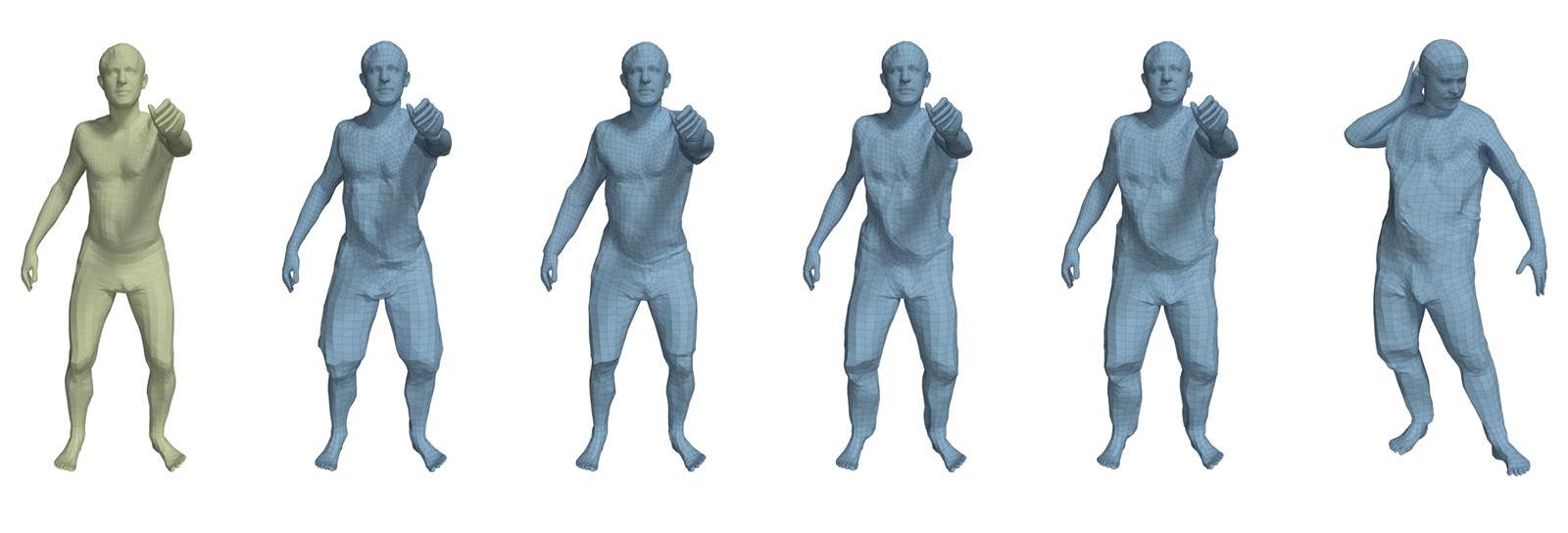

Three-dimensional human body models are widely used in the analysis of human pose and motion. Existing models, however, are learned from minimally-clothed 3D scans and thus do not generalize to the complexity of dressed people in common images and videos. Additionally, current models lack the expressive power needed to represent the complex non-linear geometry of pose-dependent clothing shape. To address this, we learn a generative 3D mesh model of clothed people from 3D scans with varying pose and clothing. Specifically, we train a conditional Mesh-VAE-GAN to learn the clothing deformation from the SMPL body model, making clothing an additional term on SMPL. Our model is conditioned on both pose and clothing type, giving the ability to draw samples of clothing to dress different body shapes in a variety of styles and poses. To preserve wrinkle detail, our Mesh-VAE-GAN extends patchwise discriminators to 3D meshes. Our model, named CAPE, represents global shape and fine local structure, effectively extending the SMPL body model to clothing. To our knowledge, this is the first generative model that directly dresses 3D human body meshes and generalizes to different poses. The model, code and data are available for research purposes at this website.
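As a rough illustration of what "clothing as an additional term on SMPL" can mean, the sketch below adds a per-vertex displacement, decoded from a latent code together with pose and clothing-type conditions, to a body mesh. It is a toy under stated assumptions and not the CAPE implementation: the random linear `decode` function stands in for the Mesh-VAE-GAN decoder, the body vertices are random placeholders rather than SMPL output, the dimensions `LATENT_DIM`, `POSE_DIM`, and `NUM_CLOTHING_TYPES` are hypothetical, and posing/skinning is skipped entirely. Only the vertex count (6890, the size of the SMPL template mesh) is taken from SMPL.

```python
import numpy as np

NUM_VERTS = 6890          # vertex count of the SMPL template mesh
LATENT_DIM = 8            # hypothetical latent size, for illustration only
POSE_DIM = 6              # hypothetical pose-condition size
NUM_CLOTHING_TYPES = 4    # hypothetical number of clothing types


def make_toy_decoder(rng):
    """Return a stand-in decoder: a fixed random linear map (NOT the real network)."""
    in_dim = LATENT_DIM + POSE_DIM + NUM_CLOTHING_TYPES
    weights = rng.normal(scale=1e-3, size=(in_dim, NUM_VERTS * 3))

    def decode(z, pose, clothing_onehot):
        # Concatenate latent code and conditions, then map to per-vertex offsets.
        cond = np.concatenate([z, pose, clothing_onehot])
        return (cond @ weights).reshape(NUM_VERTS, 3)

    return decode


def dress_body(body_verts, displacement):
    """Clothed mesh = body vertices + per-vertex clothing displacement."""
    return body_verts + displacement


rng = np.random.default_rng(0)
decode = make_toy_decoder(rng)

body_verts = rng.normal(size=(NUM_VERTS, 3))   # placeholder for a real body mesh
pose = np.zeros(POSE_DIM)                      # placeholder pose condition
clothing = np.eye(NUM_CLOTHING_TYPES)[1]       # one-hot clothing-type condition

z = rng.normal(size=LATENT_DIM)                # sample a clothing latent code
clothed = dress_body(body_verts, decode(z, pose, clothing))
print(clothed.shape)
```

Drawing a different `z` while keeping `pose` and `clothing` fixed yields a different displacement on the same body, which mirrors the idea of sampling clothing for a given pose and clothing type.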

More Information

News

09/11/2021 CVPR 2022 Update on ethics:

Following CVPR 2022 recommendations on the use of datasets, we would like to declare that:

29/10/2021 We now provide packed CAPE data that are compatible with POP and SCALE. Please check out the bottom of the download page for more information.

12/10/2021 Check out our new work at ICCV 2021, trained with CAPE data:

- POP: a point-based, unified model for multiple subjects and outfits that can turn a single, static 3D scan into an animatable avatar with natural pose-dependent clothing deformations.

15/04/2021 Check out our new papers to appear in CVPR 2021; both use CAPE data!

- SCALE: Modeling pose-dependent shapes of clothed humans explicitly with hundreds of articulated surface elements: the clothing deforms naturally even in the presence of topological change!

- SCANimate: creating an avatar with pose-dependent clothing deformation from raw scans without template surface registration!

07/07/2020 20 sequences of high-quality raw scans for several subjects are now available upon request! Please first register on our website, then send us the signed consent form for raw scan data (available in the "Downloads" section once logged in).

12/06/2020 The CAPE dataset is released! Please register first to access the downloads.

Citing the CAPE Model and Dataset

If you find the CAPE model and dataset useful to your research, please cite our work:

@inproceedings{CAPE:CVPR:20,

title = {{Learning to Dress 3D People in Generative Clothing}},

author = {Ma, Qianli and Yang, Jinlong and Ranjan, Anurag and Pujades, Sergi and Pons-Moll, Gerard and Tang, Siyu and Black, Michael J.},

booktitle = {Computer Vision and Pattern Recognition (CVPR)},

month = jun,

year = {2020},

month_numeric = {6}}

If you use the CAPE dataset, please also reference ClothCap:

@article{Pons-Moll:Siggraph2017,

title = {ClothCap: Seamless 4D Clothing Capture and Retargeting},

author = {Pons-Moll, Gerard and Pujades, Sergi and Hu, Sonny and Black, Michael},

journal = {ACM Transactions on Graphics, (Proc. SIGGRAPH)},

volume = {36},

number = {4},

year = {2017},

note = {Two first authors contributed equally},

crossref = {},

url = {http://dx.doi.org/10.1145/3072959.3073711}

}

Contact

For questions, please contact cape@tue.mpg.de.

For commercial licensing, please contact ps-licensing@tue.mpg.de.